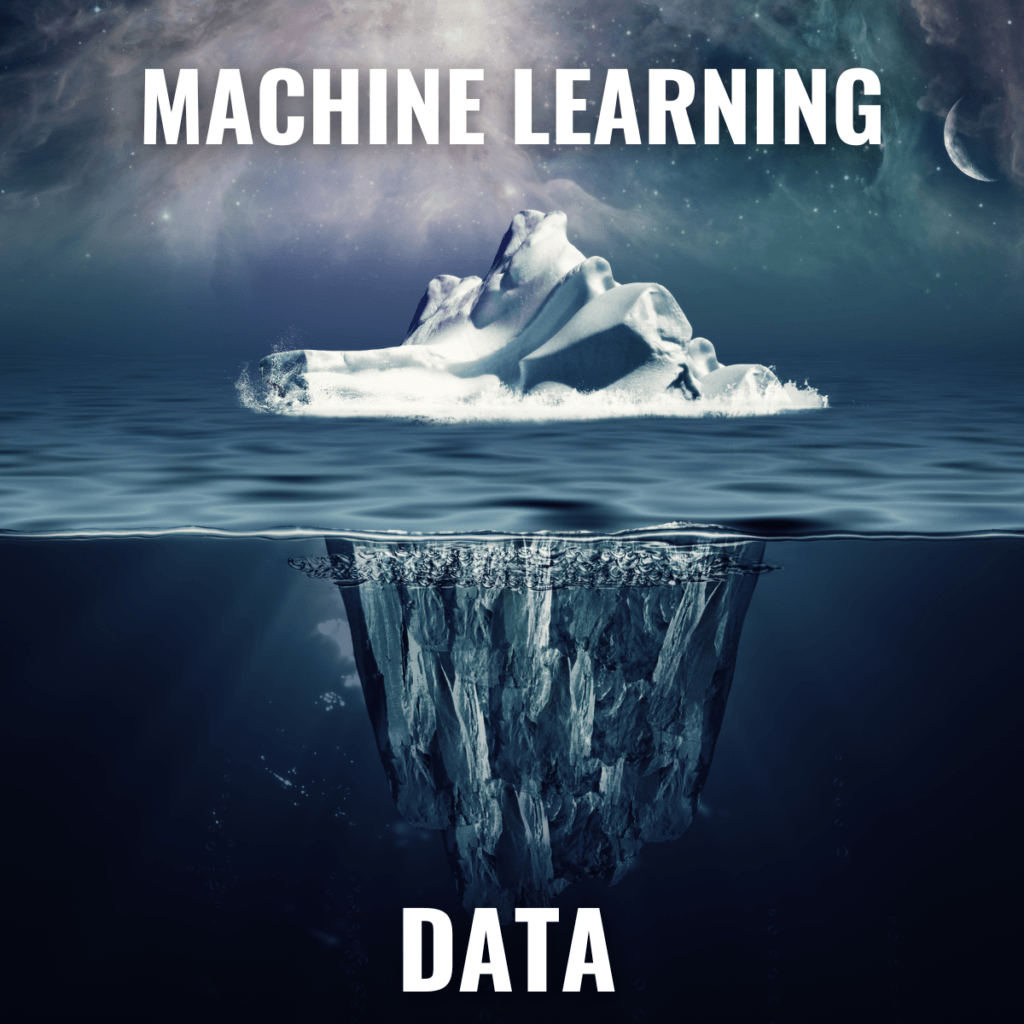

Artificial Intelligence (AI) is more about data than about models: data-centric approaches often turn out to be more effective than model-centric ones. However, AI models require a large amount of labeled data to perform well on a given task. Gathering and labeling data for common tasks such as dog-vs-cat classification is fairly straightforward. Many images can be obtained from the internet, and anyone can label them. The labeler does not need to be an expert, since everyone knows what cats and dogs look like.

Fig. 1

This becomes trickier in the case of medical data. First and foremost, the digitization of medical data is still at an early stage, so medical records are not easy to find on the internet. Secondly, even where such records exist in digital form, they cannot be made public because of privacy concerns; the data must first be de-identified before it can be used to train AI models. In short, gathering large volumes of medical data is itself an enormous task. Once we have those volumes, the next major task is to label them.

Labeling medical data is a very time-consuming process. A medical expert first has to train the annotators on how to annotate a given type of medical sample. After this training and a first round of annotation, the labels must be reviewed multiple times by experts; only then can they be used to train an AI model. The process is therefore expensive as well as slow: medical experts must be paid for their teaching and reviewing time, and annotators must be paid for their annotations.

In short, acquiring large volumes of medical data is difficult, and labeling it is a time-consuming and expensive process.

Fig. 2.

From the above discussion, we see that building an AI pipeline in the medical domain is not just about building better models, but also about finding strategies that make data labeling faster. One such strategy is Active Learning (AL), which attempts to maximize the model's performance gains while annotating as few samples as possible.

Fig. 3. An example of deep active learning. Image source: [1].

Active Learning comprises the following steps:

- Gather a large pool of unlabeled training data.

- Label a small subset of the gathered data.

- Train a model on this labeled subset.

- Using the trained model, select the samples it finds hardest (for example, those with the most uncertain predictions) from the pool of unlabeled data.

- Have human annotators label only these hard samples.

- Retrain the model on both the previously and the newly annotated data.

- Repeat the last three steps until the annotation budget is exhausted or performance stops improving.
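The steps above can be sketched as a short pool-based loop in Python. This is a minimal illustration under stated assumptions, not Synapsica's pipeline: it uses NumPy, a toy nearest-centroid classifier in place of a deep model, predictive entropy as the "hardness" score, and an `oracle` array of known labels standing in for the human annotators. All function names (`train_centroids`, `predict_proba`, `entropy`, `active_learning`) and parameter values are hypothetical. For simplicity, the seed set contains a few random samples from each class so that every class centroid exists.

```python
import numpy as np


def train_centroids(x, y, n_classes):
    """Toy 'model': one mean feature vector (centroid) per class."""
    return np.stack([x[y == c].mean(axis=0) for c in range(n_classes)])


def predict_proba(centroids, x):
    """Class probabilities: softmax over negative squared distances to centroids."""
    d2 = ((x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    logits = -d2 - (-d2).max(axis=1, keepdims=True)  # shift for numerical stability
    p = np.exp(logits)
    return p / p.sum(axis=1, keepdims=True)


def entropy(p, eps=1e-12):
    """Shannon entropy of each row of class probabilities."""
    return -(p * np.log(p + eps)).sum(axis=1)


def active_learning(x, oracle, n_classes, n_seed_per_class=2, n_query=10,
                    n_rounds=5, seed=0):
    """Pool-based active learning; `oracle` is a hypothetical stand-in for annotators."""
    rng = np.random.default_rng(seed)
    # Steps 1-2: the whole pool x is gathered; label a small seed set.
    # Simplification: a few random samples per class, so every centroid exists.
    labeled = [int(i) for c in range(n_classes)
               for i in rng.choice(np.flatnonzero(oracle == c),
                                   size=n_seed_per_class, replace=False)]
    # Repeat steps 3-6 for a fixed number of rounds.
    for _ in range(n_rounds):
        # Steps 3 and 6: (re)train on everything labeled so far.
        model = train_centroids(x[labeled], oracle[labeled], n_classes)
        unlabeled = np.setdiff1d(np.arange(len(x)), labeled)
        if unlabeled.size == 0:
            break
        # Step 4: score the unlabeled pool; higher entropy means a harder sample.
        scores = entropy(predict_proba(model, x[unlabeled]))
        # Step 5: send only the n_query most uncertain samples to the "annotators".
        picked = unlabeled[np.argsort(-scores)[:n_query]]
        labeled.extend(int(i) for i in picked)
    # Final retrain so the last queried batch is also used.
    model = train_centroids(x[labeled], oracle[labeled], n_classes)
    return model, labeled


# Toy usage: two Gaussian blobs stand in for an unlabeled pool of 200 samples.
data_rng = np.random.default_rng(1)
x = np.vstack([data_rng.normal(-2.0, 1.0, (100, 2)),
               data_rng.normal(2.0, 1.0, (100, 2))])
y = np.array([0] * 100 + [1] * 100)
model, labeled = active_learning(x, y, n_classes=2)
accuracy = (predict_proba(model, x).argmax(axis=1) == y).mean()
print(f"labeled {len(labeled)} of {len(x)} samples, accuracy {accuracy:.2f}")
```

Swapping in a real model would only change `train_centroids` and `predict_proba`; the select, label, and retrain cycle stays the same.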

Because only the samples that are hard for the model are annotated, each annotation carries more information, and the model learns more efficiently. When the training set is built by active learning rather than by random sampling, models have been shown to reach better performance with less labeled data [2]. At Synapsica, we plan to implement this pipeline, which we expect to significantly increase the rate at which we obtain well-performing AI models.

Technical readers might ask: how do we know which unlabeled samples are hard for the model? Different papers have proposed different sampling techniques that quantify the uncertainty in the model's predictions. One of the most widely used is uncertainty sampling, and a common way to measure that uncertainty is the entropy of the predicted class distribution:

$$
H(x) = -\sum_{i} y_i \log y_i
$$

where $y_i$ is the predicted probability that sample $x$ belongs to class $i$. Depending on the available annotation resources, the top 10% or 20% of unlabeled samples with the highest value of $H(x)$ can be selected and passed on to the annotators. The formula is the Shannon entropy from information theory: it is largest when the predicted probabilities are spread evenly across the classes and zero when the model puts all of its probability on a single class.
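A minimal NumPy sketch of this scoring rule and the top-fraction selection follows. It uses the natural logarithm (any base ranks samples identically); `predictive_entropy` and `select_most_uncertain` are hypothetical helper names, and `fraction` plays the role of the 10% or 20% budget. The probability rows are made-up model outputs for illustration only.

```python
import numpy as np


def predictive_entropy(probs, eps=1e-12):
    """H(x) = -sum_i y_i log y_i for each row of predicted class probabilities."""
    probs = np.asarray(probs, dtype=float)
    # eps avoids log(0); a zero probability then contributes 0 to the sum.
    return -np.sum(probs * np.log(probs + eps), axis=1)


def select_most_uncertain(probs, fraction=0.1):
    """Indices of the top `fraction` of samples by entropy, highest first."""
    scores = predictive_entropy(probs)
    k = max(1, int(round(fraction * len(scores))))
    return np.argsort(-scores)[:k]


# Illustrative predictions for four unlabeled samples over three classes.
probs = np.array([
    [0.98, 0.01, 0.01],  # confident prediction, low entropy
    [0.34, 0.33, 0.33],  # nearly uniform, close to the maximum log(3)
    [0.60, 0.30, 0.10],
    [0.50, 0.50, 0.00],  # split between two classes, entropy log(2)
])
print(predictive_entropy(probs).round(3))
print(select_most_uncertain(probs, fraction=0.5))  # → [1 2]
```

The nearly uniform row is ranked first because spreading probability evenly across classes maximizes entropy, which is exactly the "the model is unsure" signal that uncertainty sampling looks for.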

In this article, we first discussed the challenges of medical data collection and labeling. We then discussed how active learning can be used to train models with only 10–20% of the data labeled while, in favorable cases, performing on par with models trained on the fully labeled dataset. Active learning can therefore play a significant role in building good models faster by reducing the amount of data that has to be annotated.

References

Interested readers can look into these resources:

- [1] Ren et al., A Survey of Deep Active Learning, 2020.

- [2] Beluch et al., The Power of Ensembles for Active Learning in Image Classification, CVPR 2018.

- [3] Gal et al., Deep Bayesian Active Learning with Image Data, ICML 2017.